February 10, 2009

By Michael McIntosh, Senior Search Software Engineer

TNR Global

We use Microsoft FAST ESP to power a large industrial search engine that lists over 1 million companies, indexes over 3 million documents, and receives millions of visitors every month. I have been working with ESP since 2003, when it was known as FDS 3.2.

Microsoft FAST ESP is extremely flexible and can index many document types (HTML, PDF, Word, etc.). It has a very robust crawler for web documents, and you can either use FAST's intermediary FastXML format to load custom document formats into the system or use its Content APIs.

One of my favorite parts of the engine is its Document Processing Pipeline, which lets you use dozens of out-of-the-box processing plugins as well as a Python API for writing your own custom document processing stages. One custom stage we wrote, for example, looks at a web site URL and tries to identify which company it belongs to, so that additional metadata can be attached to the web document.
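The URL-to-company stage described above can be sketched in plain Python. This is not ESP's document processing API: the `process` function, the `url` and `company` field names, and the `COMPANY_DOMAINS` table are all hypothetical stand-ins, and a real stage would load its lookup data from the company database rather than from a hard-coded dict. The idea is simply to try the full host name first and then fall back to its parent domains.

```python
from urllib.parse import urlparse

# Hypothetical lookup table; a real stage would query the company database.
COMPANY_DOMAINS = {
    "example.com": "Example Industries",
    "acme-widgets.com": "Acme Widgets",
}


def identify_company(url, domains=COMPANY_DOMAINS):
    """Return the company that owns the URL's host, or None if it is unknown."""
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    # Try the full host first, then successively shorter parent domains, so
    # "parts.example.com" and "www.example.com" both resolve to "example.com".
    # The bare top-level domain ("com") is never tried on its own.
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in domains:
            return domains[candidate]
    return None


def process(document):
    """Hypothetical stage: read the 'url' field and attach a 'company' field."""
    company = identify_company(document.get("url", ""))
    if company is not None:
        document["company"] = company
    return document


print(process({"url": "http://www.example.com/about"}))
# {'url': 'http://www.example.com/about', 'company': 'Example Industries'}
```

Documents whose host matches nothing pass through unchanged, so the stage never blocks the rest of the pipeline.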

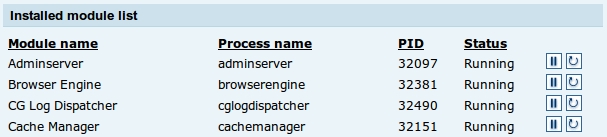

It also has a robust programming and integration SDK in several popular languages (C++, C#, and Java) for adding content, performing queries, fetching system status, and managing cluster services.

ESP has a query language called FAST Query Language (FQL) that is very capable: it supports basic Boolean searches (AND, OR, NOT) as well as phrase and term proximity searches. It also has something called “scope search”, which can be used to search document metadata (XML) whose structure can vary from document to document.
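To make the Boolean, phrase, and proximity operators concrete, here is a toy positional inverted index in Python. It illustrates the semantics only. It does not use FQL syntax or ESP's query engine, and the helper names (`docs_with`, `phrase`, `near`) and the sample documents are made up for illustration.

```python
from collections import defaultdict


def build_index(docs):
    """Map each term to {doc_id: [positions]}: a positional inverted index."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, term in enumerate(text.lower().split()):
            index[term][doc_id].append(pos)
    return index


def docs_with(index, term):
    """Set of document ids containing the term."""
    return set(index.get(term, {}))


def phrase(index, terms):
    """Documents where the terms appear consecutively and in order."""
    if not terms:
        return set()
    candidates = set.intersection(*(docs_with(index, t) for t in terms))
    hits = set()
    for doc_id in candidates:
        for start in index[terms[0]][doc_id]:
            if all(start + k in index[t][doc_id] for k, t in enumerate(terms)):
                hits.add(doc_id)
                break
    return hits


def near(index, a, b, max_distance):
    """Documents where terms a and b occur within max_distance positions."""
    hits = set()
    for doc_id in docs_with(index, a) & docs_with(index, b):
        if any(abs(i - j) <= max_distance
               for i in index[a][doc_id] for j in index[b][doc_id]):
            hits.add(doc_id)
    return hits


docs = {
    1: "stainless steel pipe fittings",
    2: "steel pipe and copper tubing",
    3: "copper fittings for steel tanks",
}
idx = build_index(docs)
print(sorted(docs_with(idx, "steel") & docs_with(idx, "pipe")))     # AND: [1, 2]
print(sorted(docs_with(idx, "copper") | docs_with(idx, "pipe")))    # OR:  [1, 2, 3]
print(sorted(docs_with(idx, "steel") - docs_with(idx, "copper")))   # NOT: [1]
print(sorted(phrase(idx, ["copper", "fittings"])))                  # [3]
print(sorted(near(idx, "copper", "steel", 3)))                      # [2, 3]
```

AND, OR, and NOT reduce to set intersection, union, and difference over posting lists; phrase and proximity searches additionally need the word positions, which is why the index stores them.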

In terms of performance, it scales fairly linearly: if you benchmark it on one machine, adding a second machine can generally double performance. You can run the system on a single machine (recommended only for development) or on many (for production). It is fault-tolerant (it can still serve some results if one of your load-balanced indices goes offline), and it has full failover support (one or more critical machines can die or be taken offline for maintenance and the system will continue to function properly).
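The "add a machine, roughly double the performance" rule of thumb amounts to a linear capacity model. Here is a back-of-the-envelope sketch in Python, assuming you have measured a single-machine query rate; the `efficiency` factor (below 1.0 if scaling is only "fairly" linear), the example numbers, and the one-spare-machine policy are hypothetical choices, not ESP defaults.

```python
import math


def estimated_throughput(single_machine_qps, machines, efficiency=1.0):
    """Linear model: each machine adds efficiency * single-machine capacity."""
    return single_machine_qps * machines * efficiency


def machines_needed(target_qps, single_machine_qps, efficiency=1.0, spares=1):
    """Smallest machine count that meets target_qps, plus spares for failover."""
    per_machine = single_machine_qps * efficiency
    return math.ceil(target_qps / per_machine) + spares


# Hypothetical benchmark: one machine handles 50 queries per second.
print(estimated_throughput(50, 1))   # 50.0
print(estimated_throughput(50, 2))   # 100.0
print(machines_needed(180, 50))      # 5 (4 to carry the load + 1 spare)
```

Keeping a spare means the load still fits when one machine is taken offline, which is the situation the fault-tolerance and failover features are meant to cover.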

So, it's very powerful, and the documentation nowadays is pretty good. So, you ask, what are the downsides?

Well, if the format of the data you need to make searchable changes frequently, that can be a pain. FAST ESP has something called an “Index Profile”, which is basically a configuration file that determines which document fields are important and should be used for indexing. Everything fed into ESP is a “document”, even if you're loading database table rows into it. Each document has several fields; typical fields include title, body, keywords, headers, documentvectors, and processingtime. You can specify as many custom fields of your own as you wish.
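Turning a database row into a “document” is essentially a column-to-field mapping. The Python sketch below shows the idea; it does not use ESP's Content API or the real Index Profile format. `INDEX_PROFILE_FIELDS`, the `naicscode` field, and the column names are hypothetical, and rejecting undeclared fields is this sketch's own choice, meant to mirror why adding fields means editing the Index Profile.

```python
# Hypothetical stand-in for the fields declared in an Index Profile.
INDEX_PROFILE_FIELDS = {"title", "body", "keywords", "company", "naicscode"}


def row_to_document(row, field_map, id_column="id", profile=INDEX_PROFILE_FIELDS):
    """Convert a database row (a dict) into a flat document of field -> text."""
    doc = {"id": str(row[id_column])}  # every document needs a unique id
    for column, field in field_map.items():
        if field not in profile:
            # A new field would first have to be added to the index profile.
            raise ValueError(f"field {field!r} is not declared in the index profile")
        value = row.get(column)
        if value is not None:  # skip NULL columns rather than index "None"
            doc[field] = str(value)
    return doc


row = {"id": 42, "name": "Acme Widgets", "summary": "Industrial widgets", "naics": 332999}
print(row_to_document(row, {"name": "title", "summary": "body", "naics": "naicscode"}))
# {'id': '42', 'title': 'Acme Widgets', 'body': 'Industrial widgets', 'naicscode': '332999'}
```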

If your content keeps mostly the same format (as web documents do), it's not a big issue. But if you have to make big changes to which fields are indexed and how they are treated, you will probably need to edit the Index Profile. Some changes to the Index Profile are “Hot Updates”, meaning you can make the change without interrupting service. Some of the bigger changes, however, are “Cold Updates”, which require a full data refeed and reindexing before the change takes effect. Depending on the size of your dataset and how many machines are in your cluster, this can take hours or days. Cold Updates are hard to schedule unless you have the budget for extra hardware that you can bring online while your production systems perform the cold update and reload the data. Doing that on production clusters more than once or twice a year takes a fair amount of planning to get right with minimal or zero downtime.
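Why a Cold Update can take hours or days comes down to simple arithmetic: the total number of documents divided by the aggregate feeding rate. The sketch below assumes the feed rate scales linearly with the number of processing nodes; the per-node rates in the example are hypothetical placeholders, so measure your own before planning a maintenance window.

```python
def refeed_hours(num_documents, docs_per_second_per_node, nodes):
    """Estimate hours for a full refeed, assuming the feed rate scales linearly with nodes."""
    if docs_per_second_per_node <= 0 or nodes <= 0:
        raise ValueError("rate and node count must be positive")
    total_rate = docs_per_second_per_node * nodes  # documents per second, cluster-wide
    return num_documents / total_rate / 3600


# Hypothetical rates for a 3-million-document index like the one described above.
print(refeed_hours(3_000_000, 20, 2))   # ~20.8 hours
print(refeed_hours(3_000_000, 5, 1))    # ~166.7 hours, roughly a week
```

The same formula shows why extra hardware helps: doubling the nodes halves the estimated window, provided the linear-scaling assumption holds.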
